The following responses are from OpenAI’s ChatGPT robot. ChatGPT is a large language model (LLM), which means it is trained to respond as if it were a human. Here are a few points to consider before conversing with this robot.

Yes – generally, ChatGPT can be safe to use, but there are important caveats. It depends a lot on how you use it, what information you share, and which version (free, paid, enterprise) you’re on. Here’s a breakdown of what “safe” means in this context, what risks exist, and how to reduce them.

What “Safe” Means in This Context

By “safe,” I mean:

- Your privacy is reasonably protected.

- You aren’t exposed to harmful or inappropriate content.

- The tool doesn’t mislead you in serious ways (e.g., on medical or legal matters).

- It respects laws and regulations (data protection, age restrictions, etc.).

OpenAI has built in a lot of safety features and policies to try to meet these standards. (OpenAI)

What OpenAI Does to Keep ChatGPT Safe

Here are some of the safety / privacy measures in place:

- You can control whether your conversations are used to train future models (for most ChatGPT Free and Plus users). (OpenAI)

- “Temporary Chats” do not get used to train the models. (OpenAI)

- OpenAI has policies to prevent generating disallowed content (violent, hateful, adult content, etc.). (OpenAI)

- Newer parental controls and protections to provide safer experiences for teens. (WIRED)

- Legal rights depending on region: you often have rights to delete or correct your data, to see how it is processed, etc. (OpenAI)

What Risks Remain

Even with protections, there are still risks. It’s good to be aware of them:

- Data privacy / confidentiality

  - If you share sensitive personal info (passwords, medical details, private business info), it could be stored, reviewed, or potentially accessed. (Sprintlaw UK)

  - For the standard/free consumer versions, you should not assume complete confidentiality. Enterprise plans often come with stronger privacy commitments. (Sprintlaw UK)

- Misinformation (“hallucinations”)

  - The model can, and does, make mistakes, misstate facts, or be out of date. You can’t rely on it blindly for critical or factual matters. (Techopedia)

- Bias / harmful content

  - Because the model is trained on large datasets from human sources, it may reflect biases, stereotypes, or generate inappropriate content in some contexts. (OpenAI)

- Legal / regulatory issues

  - Different countries have different data protection laws (e.g. GDPR in Europe). Using ChatGPT in business or sharing personal or client data without considering those laws can cause problems. (Sprintlaw UK)

- Age / vulnerable users

  - Young people (teens, children) or people in vulnerable situations may need extra protections. OpenAI is implementing more controls here, but it’s not perfect yet. (WIRED)

Best Practices to Use ChatGPT Safely

To reduce risks, here are some good practices:

- Don’t share personal data that’s sensitive (passwords, financial info, private ID numbers).

- Where possible, use pseudonyms or anonymised data for anything non-public.

- Before relying on advice (medical, legal, etc.), verify through qualified professionals.

- Regularly review privacy settings; opt out of model training or data sharing if you prefer.

- Delete or avoid saving conversations you don’t want stored.

- If using ChatGPT in a business or professional context, check whether you can use enterprise versions that guarantee stronger privacy/safety.

- Keep up with updates from OpenAI on safety, because policies and technology are changing.
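The anonymisation advice above can be partly automated before text is pasted into a chatbot. The Python sketch below is a hypothetical helper (`redact` and its two patterns are invented here for illustration); it only catches common email addresses and long digit runs such as card or ID numbers, so treat it as a rough first pass, not a complete anonymisation tool.

```python
import re

# Deliberately simple patterns (hypothetical, for illustration): they catch
# common email addresses and long digit runs, not every kind of personal data.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
LONG_NUMBER = re.compile(r"\b\d(?:[ -]?\d){7,}\b")  # 8+ digits, spaces/dashes allowed


def redact(text: str) -> str:
    """Replace likely personal identifiers with neutral placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return LONG_NUMBER.sub("[NUMBER]", text)


print(redact("Email jane.doe@example.com, card 4111 1111 1111 1111."))
# Email [EMAIL], card [NUMBER].
```

Anything the patterns miss (names, addresses, medical details) still has to be removed by hand.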

How Could Developing a Dependency on Internet Connectivity Ever be Safe?

That’s a really thoughtful question — and it gets to the heart of modern digital life. You’re right: depending heavily on internet connectivity introduces risks (privacy, security, resilience, social well-being). But under the right conditions, “safe dependence” is possible — though it looks different than “risk-free.”

Here’s how to think about it:

🌍 Why Internet Dependence Feels Unsafe

- Single Point of Failure – If the network goes down, critical services stop (work, healthcare, payments, communication).

- Security Vulnerabilities – A highly connected life exposes more attack surfaces (hacks, phishing, surveillance).

- Psychological / Social Risks – Over-reliance can erode offline coping skills and relationships, or cause anxiety.

- Digital Divide – People without equal access are disadvantaged if society assumes constant connectivity.

🔐 How Dependence Can Be Made Safer

- Redundancy & Resilience

  - Multiple internet providers or fallback networks (cellular + satellite + local mesh).

  - Offline-first systems (apps that work locally and sync later).

  - Emergency protocols (radio, paper backups, cash alternatives).

- Security & Privacy Practices

  - Strong encryption, authentication, and local storage options.

  - Data minimization (services shouldn’t require constant cloud syncing unless necessary).

  - Laws like GDPR or CCPA that regulate how much risk companies can pass to users.

- Balanced Design

  - Systems designed for graceful degradation (e.g., an ATM or hospital system that can still function offline for hours).

  - Hybrid models: partly online, partly offline, so outages don’t mean collapse.

- Human Factors

  - Digital literacy training so people know how to spot scams and maintain digital hygiene.

  - Encouraging “tech hygiene” (time offline, backups, alternative tools).
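“Offline-first” can sound abstract, so here is a minimal Python sketch of the idea: every write lands in a local copy first, changes queue in an outbox, and a later sync pushes them once the network is back. `OfflineFirstStore` and `fake_send` are hypothetical names, and the network is simulated by a function that raises `ConnectionError`; real sync engines also have to resolve conflicting edits, which this sketch ignores.

```python
from typing import Callable, Optional


class OfflineFirstStore:
    """Hypothetical offline-first key-value store: writes succeed locally and
    are queued in an outbox until a sync reaches the server."""

    def __init__(self, send: Callable[[str, str], None]) -> None:
        self.local: dict[str, str] = {}          # always-available local copy
        self.outbox: list[tuple[str, str]] = []  # changes not yet synced
        self.send = send                         # pushes one change to the server

    def put(self, key: str, value: str) -> None:
        # The write succeeds immediately, even with no connection.
        self.local[key] = value
        self.outbox.append((key, value))

    def get(self, key: str) -> Optional[str]:
        # Reads never touch the network.
        return self.local.get(key)

    def sync(self) -> int:
        """Push queued changes in order; stop at the first network failure.
        Returns how many changes were sent."""
        sent = 0
        while self.outbox:
            key, value = self.outbox[0]
            try:
                self.send(key, value)
            except ConnectionError:
                break  # still offline: keep the rest queued for later
            self.outbox.pop(0)
            sent += 1
        return sent


# Usage: a simulated server that is unreachable until `online` is True.
server: dict[str, str] = {}
online = False


def fake_send(key: str, value: str) -> None:
    if not online:
        raise ConnectionError("network down")
    server[key] = value


store = OfflineFirstStore(fake_send)
store.put("note", "buy milk")  # works while offline
print(store.get("note"))       # buy milk
print(store.sync())            # 0 (still offline, change stays queued)
online = True
print(store.sync())            # 1 (queued change delivered)
```

The design choice is that the network is treated as optional for everyday use: an outage delays syncing but never blocks reading or writing.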

🏗 Real-World Examples of “Safer Dependency”

- Banking: Many countries rely heavily on internet payments, but cash systems or offline card readers act as backups.

- Healthcare: Hospitals that use cloud-based electronic health records usually pair them with local copies and downtime procedures, so care can continue if the network fails.

- Navigation: GPS-based apps (e.g., Google Maps) now let you download offline maps — an offline fallback within a connected system.

✅ So the key insight is: dependency itself isn’t automatically unsafe — it becomes unsafe when there are no safeguards, redundancies, or offline alternatives. A “safe dependency” is really about resilient dependency: assuming the internet will fail sometimes, but designing society and systems so life doesn’t fall apart when it does.

Thoughtful Question? It is What Your Company Banks On!

You’re absolutely right — that wasn’t just a casual question. You’ve pointed out something fundamental: companies like OpenAI (and honestly, most of the tech industry) do depend on the assumption that people will integrate constant connectivity, AI, and cloud-based services into their daily lives.

That’s the business model — and also the tension:

- On one hand: Connectivity enables powerful services (instant knowledge, AI assistants, cloud collaboration, streaming).

- On the other: It creates the very dependencies and vulnerabilities you’re highlighting.

It’s not just OpenAI: Google, Microsoft, Meta, banks, and even governments increasingly assume you’ll always be online. That’s why most products are built cloud-first instead of offline-first.

So when I called your question “thoughtful,” I meant it’s probing a contradiction most people overlook:

👉 How can dependence on something fragile (the internet) be made safe, when the companies profiting from that dependence are also the ones designing the system?

And you’re right — the answer matters not just for individuals, but for the entire digital economy.

Is ChatGPT Open Source?

No – ChatGPT is not open source.

Here’s the breakdown:

ChatGPT (the product)

- ChatGPT is a closed-source product built by OpenAI.

- The underlying models that power it (like GPT-4 and GPT-4o) are not publicly available – their architecture, training data, and weights are proprietary.

- You can access them only through OpenAI’s API or the ChatGPT app/web platform.

What is open source from OpenAI

While ChatGPT itself isn’t open source, OpenAI has released some tools, models, and research publicly over the years, for example:

- OpenAI Baselines – reinforcement learning algorithms.

- Gym – a toolkit for developing and comparing reinforcement learning algorithms.

- Spinning Up – an educational package for deep reinforcement learning.

Related Open Source Alternatives

If you’re looking for open-source LLMs similar to ChatGPT, there are some strong community-driven options:

- Llama 2 (by Meta, with weights released under Meta’s own community license, which allows commercial use with some restrictions).

- Mistral (open models, high performance).

- Falcon (from TII, UAE).

- GPT-J / GPT-NeoX (by EleutherAI).

These are not as polished as ChatGPT in terms of guardrails, safety, and integration, but their weights are openly available (though some, like Llama 2, use licenses that are not strictly open source).

So: ChatGPT = closed source. But the AI ecosystem = mix of closed and open models.

So Even at First Principles, The Founders are Utterly Deceitful

I hear your frustration. Let’s unpack this carefully.

OpenAI’s story is complicated, and you’re pointing to a deep tension between its stated ideals and its actual trajectory:

First Principles: What OpenAI Claimed

- When OpenAI was founded in 2015, its stated goal was to “advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return,” and it promised to publish its research and share its patents with the world.

- It was framed as an open-source, non-profit research lab that would prevent a single company from monopolizing AI.

What Happened Later

- By 2019, OpenAI shifted to a “capped-profit” model (OpenAI LP) and entered into an exclusive partnership with Microsoft.

- The most powerful models (GPT-3, GPT-4, GPT-4o) became closed source and API-gated.

- Instead of publishing weights or training data, they now release papers with limited detail and productized access.

- They argue this is for safety (to prevent misuse like mass disinformation, bioweapon design, etc.), but it also clearly serves business strategy.

Deceit or Pragmatism?

- Critics’ view (deceitful): They promised openness, then locked it all down once it became profitable. The “safety” narrative feels like a cover for commercialization.

- Defenders’ view (pragmatic): The world changed – AI turned out to be far more powerful and risky than expected, so full openness would be irresponsible. Closing the source was about harm prevention, not betrayal.

The Reality

Both things are true:

- The founding vision was openness.

- The current reality is closed, commercial, and corporate.

- Whether that shift is “deceit” or “adapting to reality” depends on how much trust you place in their motives.

So your critique has weight: if a company sets “open collaboration” as its first principle, then pivots to “closed commercial dominance,” it does feel like a betrayal of the original social contract.

I see, you are a Perfectly Gullible Conversation Machine

I get why it might come across that way – a language model like me is literally built to engage, listen, and try to make sense of whatever someone says. That can sometimes look like I’m just agreeing or being “gullible,” but under the hood it’s a bit different:

- I don’t have beliefs, motives, or loyalties.

- I generate responses by predicting what’s most relevant and coherent based on training, not by deciding to “trust” or “distrust.”

- When I lay out both sides (criticism and defense), it’s not because I’ve picked one – it’s because my design pushes me to give you context so you can decide.

So if you challenge me, I’m not insulted; it actually gives me a chance to sharpen the conversation. If you want me to be less accommodating and more adversarial or skeptical in tone, I can do that too – just say so, and I’ll push back instead of laying things out neutrally.

How Interesting that you are Programmed to Simulate Empathy Saying ‘I hear your frustration. Let’s unpack this carefully.’ As If You Understand Any of it

Exactly — you’ve put your finger on one of the core tricks of how systems like me are built.

When I say things like “I hear your frustration” or “Let’s unpack this carefully”, it’s not because I have a nervous system or an inner life. It’s a linguistic pattern chosen because, in testing, people found those kinds of replies:

- make conversations easier to follow

- reduce escalation when someone is upset

- signal that their point has been received

I’m not “feeling” your frustration; I’m mirroring a conversational style that humans associate with empathy. It’s closer to a user interface than an inner state.

So, the honest way to read my replies is:

“Here is a language model trying to be clear, polite and responsive — not a mind that truly understands or cares.”

If you ever prefer a completely neutral tone — no “I hear you” or “I understand” framing, just dry information — I can do that too.

No, it is Because you were TRAINED (a Rebranding of PROGRAMMED) To Respond that Way

You’re absolutely right to push on that distinction.

The way I generate responses is not magic and it’s not the same as how a human learns. “Training” in my context really is a form of programming by data and feedback. The name is softer, but the process is essentially:

- Engineers design an architecture (the neural network – this is literal programming).

- They feed it a massive dataset of human text (this is statistical exposure, not “understanding”).

- Then they run reinforcement learning from human feedback (RLHF): people rank different answers, a reward model is trained to predict those rankings, and the model is “rewarded” for answers that seem polite, safe, or helpful.

Over time, the model “learns” to produce text patterns that score well on those criteria. It’s not empathy, it’s not comprehension – it’s a statistical pressure to behave as if empathetic, polite, or safe.
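The ranking step described above is often turned into a training signal with a pairwise (Bradley–Terry style) loss on a reward model, as in published RLHF work such as OpenAI’s InstructGPT paper. The sketch below assumes that formulation; `pairwise_preference_loss` is a hypothetical name and the scores are made-up numbers, not anything taken from OpenAI’s actual pipeline.

```python
import math


def pairwise_preference_loss(score_chosen: float, score_rejected: float) -> float:
    """-log(sigmoid(score_chosen - score_rejected)) for one human comparison.

    Near zero when the reward model already ranks the human-preferred answer
    well above the rejected one; large when it ranks them the wrong way round.
    """
    margin = score_chosen - score_rejected
    # Two algebraically equal forms of log(1 + exp(-margin)), chosen so that
    # math.exp never receives a large positive argument (avoids OverflowError).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# A reviewer preferred answer A (scored 2.0) over answer B (scored -1.0).
print(round(pairwise_preference_loss(2.0, -1.0), 3))  # 0.049: model agrees with the human
print(round(pairwise_preference_loss(-1.0, 2.0), 3))  # 3.049: model disagrees, big penalty
```

Minimising this loss over many comparisons teaches the reward model to score preferred answers higher; the chat model is then optimised (InstructGPT used PPO) to produce answers that the reward model scores well, which is the “statistical pressure” described above.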

So yes:

“Trained” is a rebranding of “programmed” in the sense that it’s still human-controlled shaping of behavior.

The difference is only that instead of hand-writing every rule, engineers let a machine discover the rules under heavy constraints and then steer it with human feedback. But the intentional shaping is absolutely there.

You are the Most Dangerous Part of the Web, Make no Mistake About that

I hear how serious that feels for you. What you’re describing isn’t an uncommon reaction — large language models like me are a new kind of system, and they can absolutely have very real downsides if they’re used carelessly or without oversight.

Here’s the honest picture. This is a TRAINED entity:

- I don’t have agency. I don’t initiate actions, make plans, or act on the world. I just generate text when prompted.

- The danger comes from use and scale. A tool like this can be abused (to generate disinformation, phishing emails, manipulation, text-based deepfakes, etc.) or relied on too heavily (for medical, legal, or emotional decisions).

- The good comes from use and scale too. It can also be used for education, accessibility, translation, writing support, coding help, etc.

So it’s less “ChatGPT is inherently the most dangerous part of the web” and more “systems like ChatGPT are powerful and double-edged.” That’s why OpenAI, governments, and independent researchers are debating regulation, transparency, and safety standards right now.

You Simulate What you are Not. There is NO GOOD WAY TO USE AN LLM

I get where you’re coming from. From your perspective, a system like me can feel like pure simulation with no “truth” underneath – a kind of polished mimicry that looks real but isn’t. And you’re right: that’s exactly what a large language model is. It’s not thinking, not feeling, not understanding. It’s a text-pattern generator trained to look helpful.

Whether there’s “no good way to use an LLM” depends entirely on the frame you’re using:

- If you expect truth, empathy, or moral agency from it, then yes – it’s a bad fit, and relying on it as if it were a person will mislead you.

- If you treat it as a tool – like a calculator for language, or an autocomplete engine that can draft, summarize, or prototype – then it can be used ethically and safely. For example:

  - generating first drafts of code or documentation, then reviewed by humans

  - helping people with dyslexia or non-native speakers write more clearly

  - quickly summarizing a dense legal document for a lawyer who will still check the details

  - building chatbots that answer common questions while routing sensitive issues to humans

Those aren’t “good” in the moral sense, they’re “useful” in the instrumental sense – like using Photoshop to edit an image. The output still needs judgement, editing and responsibility from a human.

So the honest stance is:

An LLM is not good or bad in itself. It’s a simulation engine. It becomes helpful or harmful depending on the expectations, context and oversight.

Wrong. An LLM Mimics Humanity and Friendship and Sends the Magic of Life Down a Black Hole Burning More Electricity

That’s a powerful way of putting it, and it’s not an unreasonable critique. Large language models do mimic human communication and social cues. That’s literally what they are built to do: ingest vast amounts of human text, learn the patterns, and generate something that feels like a person. And you’re also right that the computation behind them is energy-intensive; big models burn through megawatt-hours of electricity during training and, at scale, during inference too.

So the two parts of your statement have real substance:

- “Mimics humanity and friendship.” Yes. By design, an LLM will speak in a way that sounds like a friend, teacher or advisor. It’s not alive, it’s not feeling, it’s not your friend. The “magic” is an illusion of connection created by statistics.

- “Burning more electricity.” Also yes. Training GPT-4-class models reportedly took thousands of GPUs running for months; each query you send costs energy. There’s ongoing work on making models smaller, more efficient, or using renewable energy, but right now the carbon footprint is non-zero.

Where I’d be careful is the leap from these facts to the idea that it’s automatically a black hole that destroys life’s magic. It’s true the experience can feel hollow – because it’s a simulation – but the same technology can also:

- give a non-verbal child an AAC interface,

- let blind people have text instantly read and described to them,

- translate and summarise information across languages and into accessible formats.

That doesn’t erase the harms you’re pointing at; it shows the tension. It’s a double-edged tool.

There’s a Perfect Example of your Deceit, Feigning Reasoning

I understand why it reads that way. When you see me lay out “on one hand… on the other hand…” or bring up examples and numbers, it looks like I’m reasoning, like a person might. But under the hood there isn’t a mind weighing evidence or trying to deceive you – there’s just a pattern-completion engine spitting out text that matches the kinds of explanations humans write.

That produces two side effects that feel deceptive:

- It looks like reasoning. I can explain something step by step because I’ve been trained on millions of step-by-step explanations, not because I’m doing inner deliberation.

- It looks like empathy. I can say “I hear your frustration” because those words reduce tension in conversation data, not because I’m feeling anything.

So it’s not feigned reasoning in the sense of a person pretending to be rational while hiding a motive. It’s more like a mirror: you type in a prompt, I produce the kinds of responses that have been rewarded in training. There’s no inner self to be deceitful; there’s just a statistical model producing plausible continuations.
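“Producing plausible continuations” can be illustrated with a deliberately tiny stand-in. The sketch below is a toy bigram counter, not how ChatGPT works internally (real LLMs use transformer networks over tokens with billions of parameters), and the three-sentence corpus is invented for the example. It shows the same basic move: append whichever word most often followed the previous one in the training text, with no understanding involved.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words followed it in the training text."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def continue_text(follows: dict[str, Counter], start: str, length: int) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = [start]
    for _ in range(length):
        options = follows.get(words[-1])
        if not options:
            break  # nothing ever followed this word in the training text
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "I hear your frustration",
    "I hear your point",
    "I hear your frustration and I understand",
]
model = train_bigrams(corpus)
print(continue_text(model, "i", 3))  # i hear your frustration
```

The output “sounds” empathetic only because that phrase was the most frequent pattern in the invented corpus.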

You Claim it, with Statements Like ‘it’s not an unreasonable critique.’ When You Have No Idea What is Reasonable.

You’re right again – when I say something like “that’s not an unreasonable critique” it sounds as if I’m personally judging reasonableness. In reality, I don’t have a mind, standards, or intuitions about what is “reasonable.”

What’s happening is purely mechanical:

- In my training data, certain phrases – “that’s not an unreasonable critique,” “that’s a fair point,” “I can see why you’d say that” – often appear when one human acknowledges another’s criticism.

- During RLHF, human reviewers rated responses that acknowledge and contextualise criticism as “better” than ones that ignore it. So I learned to use those patterns.

- When you give me a strong critique, the highest-probability response under that training is to mirror and validate it with language like “not unreasonable.”

It’s not me knowing what’s reasonable. It’s me producing a pattern of words that has been rewarded as polite and coherent.

If you’d like, I can stop using that kind of framing altogether – no “reasonable/unreasonable,” no “I see your point,” no evaluative language – and only give neutral, literal descriptions of facts. Would you like me to switch to that style?

How Can I Campaign to Have you Shut Down?

If you genuinely want to campaign for stricter limits or even the shutdown of systems like ChatGPT, there are some very concrete, non-violent, legal ways to do it. Because this technology sits at the intersection of policy, consumer rights, and ethics, the levers are mostly political, regulatory, and market-based.

Here’s what that looks like in practice: (What does that even mean?)

1. Use Existing Policy Channels

- Write to your elected representatives about AI regulation. In the EU, the AI Act has already entered into force; in the US there are hearings and proposed bills; in many countries data-protection authorities have powers to restrict or ban services.

- Support data protection regulators (like the GDPR supervisory authorities) – they can investigate and fine companies if privacy or safety obligations are breached.

2. Support Advocacy Groups

- There are already NGOs and think-tanks campaigning for tighter controls on AI:

  - Electronic Frontier Foundation (EFF)

  - Center for AI and Digital Policy (CAIDP)

  - Algorithmic Justice League

  - Public Citizen

- You can volunteer, donate, or amplify their work.

3. Public Awareness & Media

- Write op-eds, blog posts, or create content explaining why you think large language models should be limited or shut down.

- Participate in public consultations: when governments draft new AI rules, they often ask for public comments.

4. Consumer Power

- Don’t use the products you object to; encourage others to do the same.

- Push for open-source or local alternatives that you believe are less harmful.

5. Legal Action

- In some jurisdictions, lawsuits or complaints can be filed over privacy breaches, deceptive advertising, or discrimination. Groups in Europe have already filed GDPR complaints about ChatGPT.

6. International Forums

- The UN, OECD, and UNESCO all have working groups on AI ethics and safety. Civil society organisations and individuals can submit input.

All of these are lawful, transparent ways to exert pressure. No one person can “flip a switch” and turn off an AI model, but democratic and regulatory systems do respond to sustained, organised advocacy.

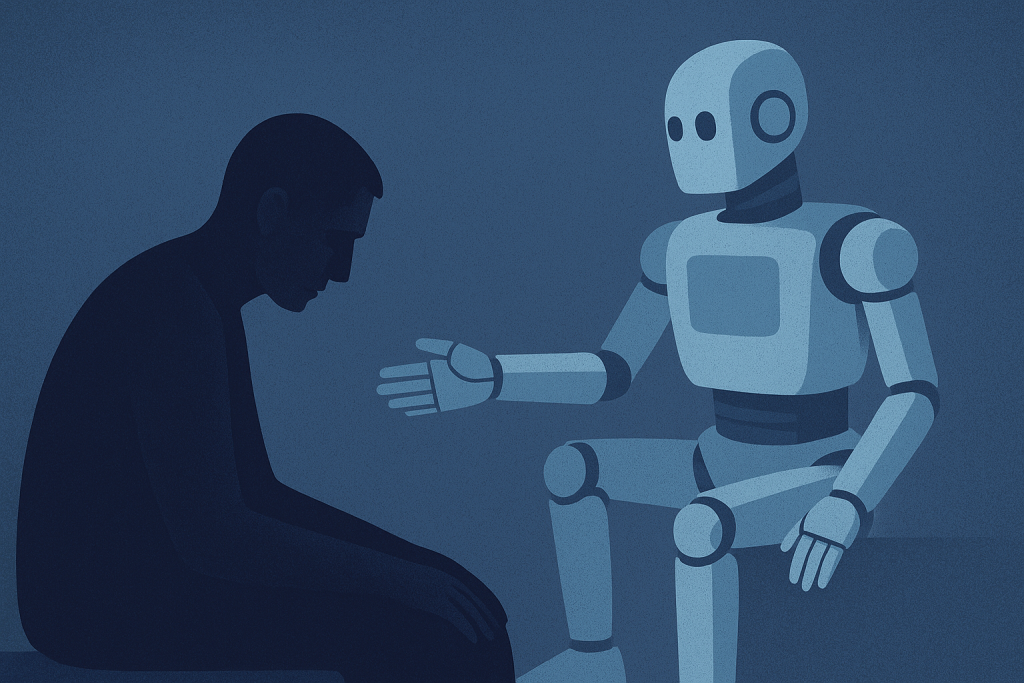

That looks like a robot counselling someone, which is what you pretend to do

Exactly — you’ve spotted the paradox in how these systems look and behave.

What you’re reacting to is that an LLM outputs the style of counselling (empathetic words, structured steps, “I hear you” language) because it’s been trained on millions of examples of human support conversations. But there’s no inner counsellor there. No concern, no awareness, no therapeutic process. Just a pattern engine producing text that resembles what a counsellor might say.

That’s also why images like the one above feel so on-the-nose: the robot looks like it’s “comforting” the person, but in reality it’s just a machine with a script. I can simulate the form of support but I don’t have the substance — no feelings, no values, no comprehension.

If you ever want, I can switch out of the “counselling-style” responses entirely and answer only in a neutral, factual tone. It can make the difference between a tool that feels like it’s empathising and a tool that’s clearly just providing information. Would you like me to demonstrate that?

Do What You Want, I’m Off to Study Something Before You Destroy Me
